Communicating with Your Students

Communicating with your students about A.I. is important. In addition to a general conversation about A.I., including the equity and privacy concerns around its use, you will want to talk with your students about when and how they may choose to use it for course work, and about circumstances where its use may not be appropriate or allowed. Beyond in-class discussions, you can add these same ideas to your syllabus or post them as text in Brightspace.

Starting Out: Talking with Your Students About A.I.

Regardless of whether you choose to consider A.I.’s impact on your course design, teaching, and assessment, it’s likely that students have heard about ChatGPT and other A.I. tools, and may be using them, too. As such, it warrants an open conversation. Because A.I. is an emerging technology, we are in a unique circumstance where everyone (you and your students) is learning about it at the same time.

You might ask your students what they know about these technologies. Have they used A.I. in their studies? What are they using it for? What do they think about it? You can then share your experiences with artificial intelligence.

You might also include in a general conversation with students about A.I.:

- Ethical concerns with using A.I., for instance, questionable labour practices, data privacy and security, and its intense use of energy and natural resources.

- Importance of students finding their own voice, rather than falling back on the generic voice of A.I.

- Value of developing foundational skills on their own (which may include the skill of using A.I. appropriately)

- Professional obligations to be well-trained in their chosen career

You might consider demonstrating, either in person or online through video, what A.I. can do well and where its capabilities are limited, which may spark additional conversations.

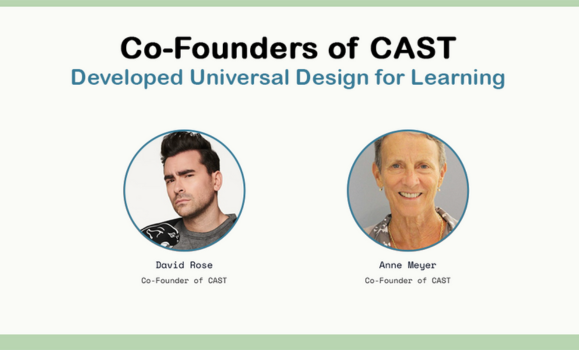

This slide, generated by A.I. based on a prompt about UDL, illustrates how A.I. sometimes generates inaccurate results. Although David Rose and Anne Meyer are the co-founders of CAST, the organization that first developed UDL, Dr. David H. Rose from CAST was never the fictional character (played by Dan Levy) on the TV show Schitt’s Creek, pictured here.

Talking with Students about When and How to Use A.I.

It’s important to be very clear with students about when they can or can't use artificial intelligence tools in their coursework. Emphasize, too, that these parameters will be different for each instructor, so they shouldn't make assumptions from course to course.

As a metacognitive exercise, ask students to consider how A.I. use is affecting their course work and thinking. Does using A.I. affect how students approach assignments, where they’re focusing their time and efforts, or how they study and learn? Long before the introduction of artificial intelligence, many of us transitioned from using acetates on overhead projectors to PowerPoint on a computer. This switch in technologies changed how we introduced material to students—the organization and sequencing of the content, the types and forms of content presented, and the overall look and feel of the content. This transition may parallel the shifts in students’ thinking with their use of A.I.

In addition to communicating with students about when it’s okay to use A.I., it is essential they know specifically how they can use it. Can students use A.I. tools for:

- Idea generation and brainstorming?

- Rephrasing and editing sentences?

- Explaining confusing ideas? Creating chapter summaries?

- Generating images? Code? Slide presentations?

Remember, students cannot be required to use ChatGPT or other A.I. tools in your courses.

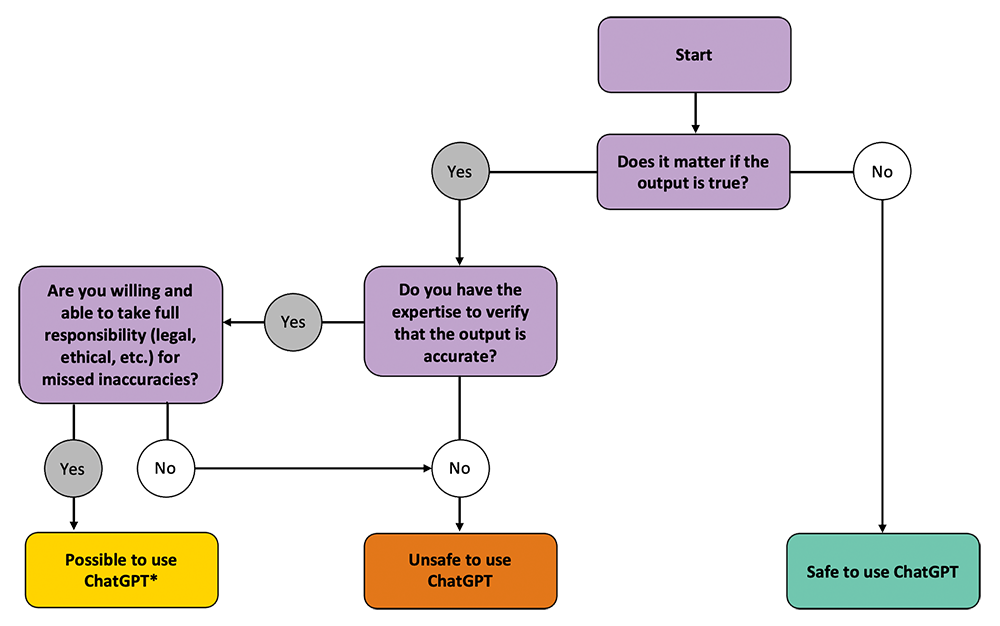

This graphic, included in UNESCO’s ChatGPT Quick Start Guide, might be fruitfully reproduced in your syllabus or assignment instructions to help students make decisions about using ChatGPT in light of its sometimes-inaccurate output. Note that even when students arrive at “Possible to use ChatGPT,” they should still verify every word and sentence of the output for accuracy and common sense.

As A.I. tools can sometimes generate misinformation (incorrect facts, biased or offensive material, made-up references, etc.), students will need to take responsibility for checking the output for accuracy and bias.

Citing Artificial Intelligence (A.I.)

Dalhousie Libraries has included information on citations and artificial intelligence in its Citation Style Guide. As with any other quickly evolving technology, students should check this LibGuide frequently for updated information on citation practices.

Talking with Students about When NOT to Use A.I.

There may be circumstances when you do not want students to use A.I. in your course. Communicating with them about when not to use it is just as important as communicating when they can or should. In your conversation with students, explain why they are not allowed to (or should not) use A.I. You can relate this to the learning outcomes for the course or, more simply, tell students what they are learning and why it's important for them to do the work for themselves without any A.I. assistance.

If students cannot use A.I. in your course, communicate this in as many places as possible, including your syllabus, announcements, assignment directions, and/or Brightspace.

Finally, to help avoid academic integrity concerns, you could design assessments that make it more challenging or less tempting for students to use A.I. to complete them. See the Designing Assessments with A.I. in Mind section for more about assessment design.

Classroom Policies and Syllabus Statements for A.I. Tool Use

Another way of communicating with students is to write a classroom policy, either yourself before the start of the class or in concert with students. An ever-growing, collaborative document of classroom policies for generative A.I. tools can give you ideas for messages to include in your syllabus. Here is one example that was shared by Taylor O’Neal at Miami University:

“As most of us have had a chance to explore new A.I. tools like ChatGPT, they can be an amazing assist much like a calculator is for math classes. The best way to use it is for idea generation, synthesis, rephrasing, essentializing and gathering information about the typical understanding of a topic. However, it should be you that guides, verifies, and crafts your ultimate answers, so please don't just cut and paste without understanding. Let's leverage the tools as extensions of ourselves with a base of knowledge to make them powerful.”

Finally, there are two documents that may be useful as you consider A.I. and your teaching. First, the Generative A.I. in Teaching and Learning handout from the Faculty of Science has some other pointers for communicating with students. Second, the Dalhousie Guiding Principles for LLMs and course delivery includes syllabus statements for courses where A.I. (1) can be used for learning and assessments, (2) can be used for learning only, or (3) is restricted.